According to PYMNTS.com, a new report from Harvard Business Review Analytic Services finds that while most executives believe agentic AI will transform their business and become an industry standard, only a minority are actually using it at scale. The report highlights a major gap between enthusiasm and organizational readiness, with many companies struggling to turn high expectations into consistent results. It identifies the core problems as lagging governance, gaps in workforce skills, and a lack of clear success measures. Separately, Singapore’s Infocomm Media Development Authority (IMDA) has introduced what it calls the world’s first formal governance framework for agentic AI, announced at the World Economic Forum in Davos. Another report, from Accenture and Okta, warns that over 90% of organizations are already using AI agents but only a small fraction have strong governance strategies in place, creating significant security risks.

The Wide-Open Gap Between Hype and Delivery

Here’s the thing: this isn’t a technology problem anymore. It’s an organizational and cultural one. The tech exists, the belief is there, but the messy reality of integrating autonomous systems into legacy processes? That’s where everything falls apart. The report says early adopters are seeing gains, which is great, but those gains also create a dangerous FOMO effect. Everyone else is rushing in without doing the foundational work. They haven’t defined what success looks like, or how to manage risk when an AI agent does something unexpected. It’s like giving a new, incredibly fast employee the keys to the building on their first day, with no job description, training, or supervisor. What could possibly go wrong?

Singapore’s Governance Play: A Necessary First Step

Singapore’s move is fascinating and probably a sign of things to come globally. While everyone else is talking about theoretical risks, they’ve published a practical framework. It tackles the hard questions: How much autonomy is too much? When does a human absolutely need to step in? How do you monitor something that’s designed to act on its own? Calling out “automation bias” is particularly sharp: it’s the creeping over-reliance on a system simply because it hasn’t failed yet. The fact that they’re treating it as a living document is smart. The rules for this stuff can’t be carved in stone; they’ll need to evolve as fast as the technology does. But let’s be skeptical: a framework is just guidance. Enforcement and real-world adoption by companies, especially outside Singapore, are a whole other battle.
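
To make the “when does a human step in?” question concrete, here is a minimal sketch of a bounded-autonomy gate. It is not taken from the IMDA framework. The action names, the `AUTO_LIMIT` and `HARD_LIMIT` thresholds, and the `gate` function are all hypothetical, assuming a policy where low-value, reversible actions run unattended, riskier ones are escalated to a person, and a short list is refused outright.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_APPROVE = "auto_approve"   # agent may act on its own
    NEEDS_HUMAN = "needs_human"     # pause and route to a human reviewer
    BLOCKED = "blocked"             # never allowed, regardless of approval


@dataclass(frozen=True)
class ProposedAction:
    agent_id: str
    action: str                 # hypothetical action name, e.g. "issue_refund"
    amount: float = 0.0         # money at stake, if any
    reversible: bool = True     # can the effect be undone afterwards?


# Hypothetical policy values, chosen only for illustration.
AUTO_LIMIT = 100.0
HARD_LIMIT = 10_000.0
BLOCKED_ACTIONS = frozenset({"delete_customer_records"})


def gate(action: ProposedAction) -> Decision:
    """Decide whether an agent acts alone, escalates to a human, or is refused."""
    if action.action in BLOCKED_ACTIONS or action.amount > HARD_LIMIT:
        return Decision.BLOCKED
    # Irreversible or higher-value actions always get a human in the loop.
    if not action.reversible or action.amount >= AUTO_LIMIT:
        return Decision.NEEDS_HUMAN
    return Decision.AUTO_APPROVE
```

Under these assumed thresholds, `gate(ProposedAction("agent-7", "issue_refund", amount=25.0))` returns `Decision.AUTO_APPROVE`, while the same refund at 500.0 returns `Decision.NEEDS_HUMAN`. Keeping the limits as data rather than logic suits the “living document” idea, since the policy can be tightened without rewriting the agent. A gate like this does nothing against automation bias on its own, though: a reviewer who approves every escalation is just a slower rubber stamp.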

The Looming “Identity Sprawl” Crisis

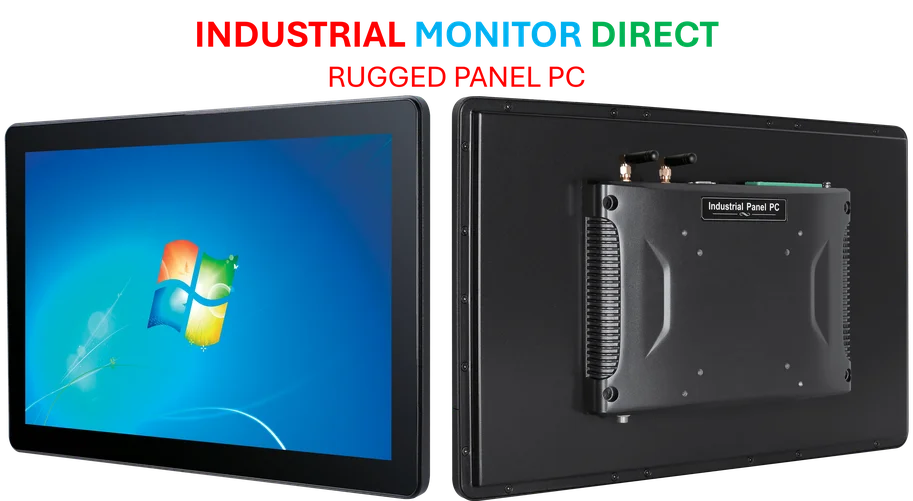

This is the scariest part, honestly. The Accenture/Okta insight is crucial: we’re creating a massive population of unmanaged digital employees. Think about it. If over 90% of organizations are using these agents, and the agents need access to data and systems to function, that’s thousands, maybe millions, of new non-human identities holding permissions. And if you’re not governing them, you have no idea what they’re doing, who “owns” them, or what they have access to. The report’s recommendation to treat each agent as a formal digital identity is the only sane path forward. It’s basic IT hygiene, just applied to a new class of entity. Without it, you’re building a shadow workforce that could leak data, make unauthorized transactions, or create a compliance nightmare so vast you can’t even map it. Managing the AI agents themselves? That’s the new frontier.
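
Treating each agent as a formal digital identity can be sketched in a few lines. The following is a minimal illustration assuming a simple in-memory registry; `AgentIdentity`, `AgentRegistry`, and the scope strings are hypothetical names, not an Okta or Accenture API. Each agent gets a named owner, an explicit set of permissions, and an expiry date, and every access check denies by default.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class AgentIdentity:
    """One non-human identity. All field names here are hypothetical."""
    agent_id: str
    owner: str                  # accountable human or team
    scopes: frozenset[str]      # explicit permissions; nothing is implied
    expires_at: datetime        # access is time-limited, not permanent
    active: bool = True         # False once the identity is revoked


class AgentRegistry:
    """In-memory sketch; a real deployment would sit in an identity provider."""

    def __init__(self) -> None:
        self._agents: dict[str, AgentIdentity] = {}

    def register(self, agent_id: str, owner: str, scopes: set[str],
                 ttl: timedelta = timedelta(days=30)) -> AgentIdentity:
        # Refuse to create an identity nobody is accountable for.
        if not owner:
            raise ValueError(f"agent {agent_id!r} needs a named owner")
        identity = AgentIdentity(
            agent_id=agent_id,
            owner=owner,
            scopes=frozenset(scopes),
            expires_at=datetime.now(timezone.utc) + ttl,
        )
        self._agents[agent_id] = identity
        return identity

    def revoke(self, agent_id: str) -> None:
        self._agents[agent_id].active = False

    def is_allowed(self, agent_id: str, scope: str) -> bool:
        """Deny by default: unknown, revoked, expired, or out-of-scope requests fail."""
        identity = self._agents.get(agent_id)
        if identity is None or not identity.active:
            return False
        if datetime.now(timezone.utc) >= identity.expires_at:
            return False
        return scope in identity.scopes

    def inventory(self) -> dict[str, list[str]]:
        """Map each owner to the IDs of their non-revoked agents."""
        by_owner: dict[str, list[str]] = {}
        for identity in self._agents.values():
            if identity.active:
                by_owner.setdefault(identity.owner, []).append(identity.agent_id)
        return by_owner


if __name__ == "__main__":
    registry = AgentRegistry()
    registry.register("invoice-bot", owner="finance-ops",
                      scopes={"erp:read", "invoices:create"})
    print(registry.is_allowed("invoice-bot", "invoices:create"))  # True
    print(registry.is_allowed("invoice-bot", "payments:send"))    # False: never granted
    registry.revoke("invoice-bot")
    print(registry.is_allowed("invoice-bot", "invoices:create"))  # False: revoked
```

The `inventory` method answers the “who owns them?” question directly, and the deny-by-default check means an agent nobody registered simply cannot act. In practice this job belongs to an identity platform rather than a Python dict, but the questions it answers are the same ones raised here: who owns each agent, what it may touch, and whether its access is still valid.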

So, What Actually Works?

The HBR report gives us a clue about the companies that are succeeding. They’re focusing on practical, bounded use cases first. They’re investing in their people, not just the software. And they’re tying everything directly to business strategy, not chasing AI for AI’s sake. Basically, they’re doing the boring, hard work of change management. The loud warning from the industry is clear: slow down on deployment and speed up on governance. Because right now, the race is creating a massive attack surface and a pile of unmanaged risk. The companies that win with agentic AI won’t be the ones that deploy it the fastest. They’ll be the ones that manage it the best.