According to Wccftech, Microsoft CEO Satya Nadella revealed in a recent podcast interview that the company cannot accommodate additional AI GPUs beyond one generation due to severe power and space constraints. Nadella described the current problem as a shortage of power rather than a glut of compute, explaining that Microsoft has chips sitting in inventory that cannot be “plugged in” because the company lacks the power infrastructure and “warm shells” needed to deploy them. The CEO specifically highlighted that power requirements for NVIDIA’s rack-scale configurations have risen dramatically, with rack TDPs expected to increase by 100 times from Ampere to the next-generation Kyber rack design. Nadella’s comments directly challenge NVIDIA CEO Jensen Huang’s assertion that excess compute is “non-existent” for the next two to three years, suggesting the energy-compute constraint is already affecting major AI players.

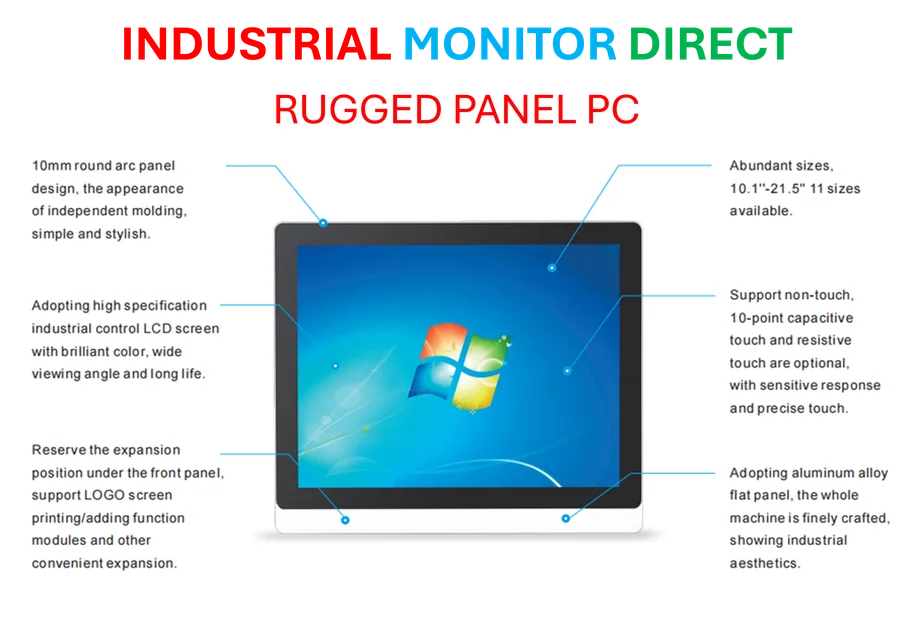

The Silent Crisis in AI Infrastructure

What Nadella is describing is a fundamental shift in the AI hardware landscape that many industry observers have yet to fully appreciate. While attention has been focused on semiconductor manufacturing capacity and chip supply chains, the real bottleneck has quietly shifted to energy infrastructure. Data centers built for traditional cloud computing workloads simply weren’t designed to handle the power densities required by modern AI training clusters. A single NVIDIA DGX server can consume 10+ kilowatts, and when you scale that to thousands of units in a single data center, you’re looking at power requirements that rival small cities. The problem isn’t just generating enough electricity. It is also delivering that power to specific locations and managing the heat output that comes with it.
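To make that scaling concrete, here is a rough back-of-the-envelope sketch in Python. The 10 kW per server figure comes from the paragraph above; the server counts and the `pue` overhead multiplier (power usage effectiveness, covering cooling and distribution losses) are illustrative assumptions, not numbers from Microsoft or NVIDIA.

```python
def cluster_power_mw(num_servers: int,
                     kw_per_server: float = 10.0,
                     pue: float = 1.0) -> float:
    """Estimate total facility power in megawatts.

    kw_per_server: per-server draw (10 kW is the article's DGX figure).
    pue: assumed overhead multiplier; 1.0 means IT load only.
    """
    return num_servers * kw_per_server * pue / 1000.0


if __name__ == "__main__":
    # Hypothetical cluster sizes, chosen only to show how the total grows.
    for n in (1_000, 5_000, 10_000):
        it_load = cluster_power_mw(n)
        with_overhead = cluster_power_mw(n, pue=1.3)  # assumed PUE
        print(f"{n:>6} servers: {it_load:.0f} MW IT load, "
              f"{with_overhead:.0f} MW with overhead")
```

Even under these simplified assumptions, a few thousand servers land in the tens of megawatts, which is why delivery and cooling become site-level problems.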

NVIDIA’s Unsustainable Power Trajectory

The 100x increase in rack power that Nadella cited, from Ampere to the upcoming Kyber design, isn’t just a technical challenge. It points to an economic and environmental crisis in the making. NVIDIA’s architectural advancements have followed a predictable pattern of accepting higher total power draw in exchange for raw performance, but we’re rapidly approaching physical limits. When rack power requirements scale from 10-20 kilowatts into the megawatt range, you’re dealing with cooling challenges that require completely new approaches to data center design. Liquid cooling systems, advanced heat exchangers, and specialized power distribution become necessities rather than optimizations. The capital expenditure required to retrofit existing data centers or build new ones capable of handling these loads could fundamentally change the economics of AI deployment.
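The arithmetic behind that jump can be sketched as follows. This takes the article’s figures at face value (a 10-20 kW starting point and a 100x multiplier); the 10 MW hall budget and the function names are hypothetical, picked only to show how few racks fit once per-rack power grows.

```python
SCALE_FACTOR = 100  # the 100x figure quoted in the article


def scaled_rack_kw(base_kw: float, factor: float = SCALE_FACTOR) -> float:
    """Per-rack power after applying the quoted multiplier."""
    return base_kw * factor


def racks_per_budget(budget_mw: float, rack_kw: float) -> int:
    """Whole racks that fit within a fixed power budget."""
    return int(budget_mw * 1000 // rack_kw)


if __name__ == "__main__":
    hall_budget_mw = 10  # hypothetical data hall power budget
    for base_kw in (10, 20):
        new_kw = scaled_rack_kw(base_kw)
        print(f"{base_kw} kW rack -> {new_kw / 1000:.0f} MW rack; "
              f"racks per hall: {racks_per_budget(hall_budget_mw, base_kw)} "
              f"-> {racks_per_budget(hall_budget_mw, new_kw)}")
```

Under these assumptions, the same power feed that once served hundreds of racks supports only a handful, which is the retrofit problem in miniature.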

Microsoft’s Strategic Pivot and Industry Implications

Satya Nadella’s comments signal a strategic shift for Microsoft and potentially the entire industry. When the world’s second-largest cloud provider says it can’t deploy more chips, it suggests we’re reaching the practical limits of current AI scaling. This isn’t just about Microsoft, since every major cloud provider likely faces similar constraints. The implications are profound: we may see increased investment in alternative AI architectures that prioritize efficiency over raw performance, renewed focus on model compression and optimization techniques, and potentially even a slowdown in the breakneck pace of model scaling. Companies that can deliver meaningful AI performance improvements without doubling power requirements every generation will suddenly become far more valuable.

The Coming Reshuffle in AI Hardware

This power constraint creates opportunities for NVIDIA competitors and alternative approaches to AI acceleration. AMD, Intel, and cloud-specific processors like Google’s TPUs that can deliver better performance-per-watt suddenly have a compelling value proposition beyond just price. More importantly, it may accelerate investment in neuromorphic computing, photonic processors, and other emerging technologies that promise radically better energy efficiency. The companies that succeed in the next phase of AI won’t necessarily be those with the fastest chips, but those with the most sustainable compute architectures. We’re likely to see increased M&A activity as cloud providers acquire companies with energy-efficient AI technologies and power management solutions.
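Why performance-per-watt starts to matter more than peak speed can be shown with a small sketch. Both accelerators below are entirely hypothetical, with made-up throughput and wattage; the point is only that under a fixed site power budget, total throughput depends on how many chips you can power, not on the speed of any single one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    name: str
    perf: float   # throughput per chip, arbitrary units (hypothetical)
    watts: float  # power draw per chip (hypothetical)

    @property
    def perf_per_watt(self) -> float:
        return self.perf / self.watts


def site_throughput(chip: Accelerator, power_budget_w: float) -> float:
    """Total throughput when the site is limited by power, not chip supply."""
    chips_powered = int(power_budget_w // chip.watts)
    return chips_powered * chip.perf


if __name__ == "__main__":
    fast = Accelerator("fast-hypothetical", perf=100.0, watts=1000.0)
    efficient = Accelerator("efficient-hypothetical", perf=70.0, watts=500.0)
    budget_w = 1_000_000.0  # assumed 1 MW available for accelerators

    for chip in (fast, efficient):
        print(f"{chip.name}: {chip.perf_per_watt:.2f} perf/W, "
              f"site throughput {site_throughput(chip, budget_w):.0f}")
```

In this toy comparison the slower chip wins at the site level because twice as many of them fit inside the same power envelope. Real deployments also have to account for networking, memory, and software support, which this sketch ignores.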

The Practical Reality for AI Deployment

Looking forward, the industry faces a painful period of adjustment. Cloud providers will need to make difficult choices about which AI workloads to prioritize and how to allocate their constrained power resources. We may see the emergence of “AI power credits” similar to carbon credits, where companies trade capacity based on their energy allocations. The economics of AI inference especially will need to be reevaluated—services that seemed profitable when only considering compute costs may become unsustainable when full power infrastructure costs are accounted for. This constraint could actually benefit the industry by forcing more efficient use of resources and encouraging innovation in areas beyond simple model scaling, but the transition will be challenging for companies that built their strategies around unlimited cheap compute.
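The point about inference economics can be illustrated with a simple margin calculation. Every number here is a placeholder assumption (revenue, compute cost, electricity price, overhead multiplier, and the amortized infrastructure charge per kilowatt), so the sketch shows the shape of the argument rather than anyone’s actual costs.

```python
def hourly_margin(revenue: float,
                  compute_cost: float,
                  power_kw: float = 0.0,
                  price_per_kwh: float = 0.0,
                  pue: float = 1.0,
                  infra_cost_per_kw_hour: float = 0.0) -> float:
    """Margin per accelerator-hour, in the same currency as the inputs.

    power_kw: accelerator power draw (assumed).
    pue: assumed overhead multiplier for cooling and distribution.
    infra_cost_per_kw_hour: assumed amortized cost of power infrastructure.
    """
    energy_cost = power_kw * pue * price_per_kwh
    infra_cost = power_kw * infra_cost_per_kw_hour
    return revenue - compute_cost - energy_cost - infra_cost


if __name__ == "__main__":
    # Placeholder figures, not real pricing.
    compute_only = hourly_margin(revenue=2.00, compute_cost=1.80)
    full_cost = hourly_margin(revenue=2.00, compute_cost=1.80,
                              power_kw=1.0, price_per_kwh=0.10,
                              pue=1.3, infra_cost_per_kw_hour=0.15)
    print(f"compute-only margin: {compute_only:+.2f}")
    print(f"margin with power and infrastructure: {full_cost:+.2f}")
```

With these placeholder inputs, a service that looks profitable on compute alone turns negative once energy and infrastructure are charged against it.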