According to TechSpot, OpenAI’s valuation has climbed to approximately $500 billion despite not yet achieving profitability, raising questions about whether the company has become “too big to fail.” The AI firm’s partnerships extend to major technology players including Microsoft, whose market value briefly surpassed $4 trillion following OpenAI’s restructuring, and Nvidia, which reached a $5 trillion valuation partly due to its AI collaborations. Senator Bernie Sanders recently called for OpenAI to be broken up, arguing that the technology’s ubiquity and disruptive power require public oversight. The company is reorganizing under a simplified corporate structure to make it easier to raise private capital and to prepare for a potential public listing that some investors believe could become a trillion-dollar IPO. This rapid expansion raises systemic risk concerns as OpenAI becomes increasingly intertwined with the broader economy.

The Infrastructure Web

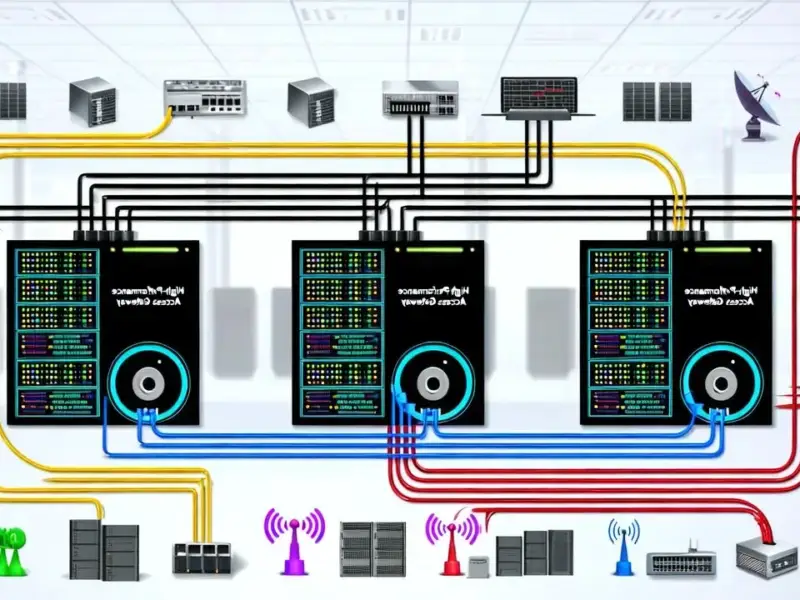

OpenAI’s technical dependencies create a web of systemic risk that extends well beyond ordinary partnerships. The company’s reliance on Nvidia’s hardware is a critical single point of failure in the AI ecosystem. Each new generation of large language models demands substantially more computational power than the last, creating a feedback loop: OpenAI must commit to massive hardware purchases that drive Nvidia’s valuation while making its own operations dependent on continued access to these specialized components. This is more than a supplier relationship. It is a mutual dependency in which OpenAI’s growth fuels Nvidia’s dominance, and Nvidia’s hardware in turn becomes essential infrastructure for OpenAI’s continued operation.

Architectural Vulnerabilities

The technical architecture underpinning OpenAI’s operations creates systemic vulnerabilities that resemble the interdependencies seen among financial institutions before 2008. The company’s models require continuous retraining and inference at massive scale, which ties its operations to cloud infrastructure providers like Microsoft Azure. Unlike traditional software companies, which can migrate between cloud providers with comparatively little friction, OpenAI faces significant switching costs and lock-in because of its specialized hardware requirements and massive data transfer needs. This architectural rigidity means that any disruption to OpenAI’s primary infrastructure partnerships could cascade through the AI ecosystem, affecting thousands of businesses that have built products on top of OpenAI’s APIs and services.
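The cascade described above can be pictured as a walk over a dependency graph. The following is a minimal sketch, not a model of any real company: the node names (`gpu_supplier`, `cloud_provider`, `model_api`, and so on) and the `DEPENDENTS` map are hypothetical placeholders chosen only to show how a single upstream failure reaches everything built on top of it.

```python
from collections import deque

# Hypothetical dependency map (illustrative only): each key is a service,
# and its list holds the services that directly depend on it.
DEPENDENTS = {
    "gpu_supplier": ["cloud_provider"],
    "cloud_provider": ["model_api"],
    "model_api": ["startup_a", "startup_b", "enterprise_tool"],
    "startup_a": ["startup_a_customers"],
}


def affected_by(failed, dependents):
    """Return every service downstream of `failed`, found breadth-first."""
    seen = set()
    queue = deque([failed])
    while queue:
        node = queue.popleft()
        for child in dependents.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


if __name__ == "__main__":
    # A failure near the top of the chain reaches every layer beneath it.
    print(sorted(affected_by("cloud_provider", DEPENDENTS)))
```

In this toy graph, a failure at the leaf `startup_b` affects nothing else, while a failure at `cloud_provider` reaches every node below it. That asymmetry is the point the paragraph above makes about infrastructure partnerships.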

Economic Concentration Risks

The concentration of so much AI development in a single entity creates economic risks with few clear precedents. OpenAI’s prominence in foundational models means that thousands of startups and enterprises have built their business models around access to GPT-4, ChatGPT, and future iterations. If OpenAI were to fail, the damage would not stop at its direct stakeholders; it could trigger a cascade of business failures across multiple industries. The situation is particularly concerning because many of these dependent businesses are themselves venture-backed and have structured their technical roadmaps around continuous access to OpenAI’s increasingly sophisticated models. This level of economic concentration in a single AI provider represents a new type of systemic risk that regulators are only beginning to understand.

Regulatory and Technical Governance

The technical complexity of OpenAI’s systems creates significant challenges for effective regulation and oversight. In traditional industries, regulators can examine balance sheets and operational metrics. Understanding OpenAI’s true risk profile, by contrast, requires deep technical expertise in machine learning infrastructure, model safety, and AI alignment research. The company’s pursuit of artificial general intelligence adds another layer of complexity: how do regulators assess the systemic risk of technology that doesn’t yet exist but could fundamentally reshape global economic systems? Because of this technical opacity, even well-intentioned oversight may struggle to keep pace with the company’s rapid innovation cycles and with architectural decisions that could have profound implications for economic stability.

The Path Forward

Addressing OpenAI’s systemic risk requires a multi-faceted approach that balances innovation with stability. Technical standards for model interoperability and infrastructure portability could reduce single-point-of-failure risks, while requirements for open APIs and standardized evaluation frameworks would create competitive pressure and redundancy in the AI ecosystem. The company’s move to a simplified corporate structure ahead of a potential public listing creates an opportunity for greater transparency and standardized reporting on technical dependencies, model performance metrics, and risk management practices. Ultimately, the challenge lies in fostering an environment where groundbreaking AI research can continue while ensuring that the failure of any single entity, no matter how innovative, doesn’t threaten the stability of the entire digital economy.
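To make the interoperability point concrete, here is a minimal sketch of what provider-agnostic access might look like from the side of a dependent business. It assumes each model provider can be wrapped as a plain function from prompt to text. The names `complete_with_failover`, `flaky_primary`, and `stub_backup` are hypothetical and do not correspond to any vendor’s real API.

```python
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when every configured provider has failed."""


def complete_with_failover(
    prompt: str, providers: Sequence[Callable[[str], str]]
) -> str:
    """Try each provider in order and return the first successful reply."""
    errors = []
    for provider in providers:
        try:
            return provider(prompt)
        except Exception as exc:  # any provider error triggers failover
            errors.append(exc)
    raise AllProvidersFailed(errors)


# Hypothetical stand-ins for real model providers (assumptions for the demo).
def flaky_primary(prompt: str) -> str:
    raise ConnectionError("primary provider unavailable")


def stub_backup(prompt: str) -> str:
    return f"backup: {prompt}"


if __name__ == "__main__":
    print(complete_with_failover("hello", [flaky_primary, stub_backup]))
```

The sketch only works if providers expose compatible interfaces, which is why standards matter: without them, every fallback path needs its own adapter, and in practice many dependent businesses never build one.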