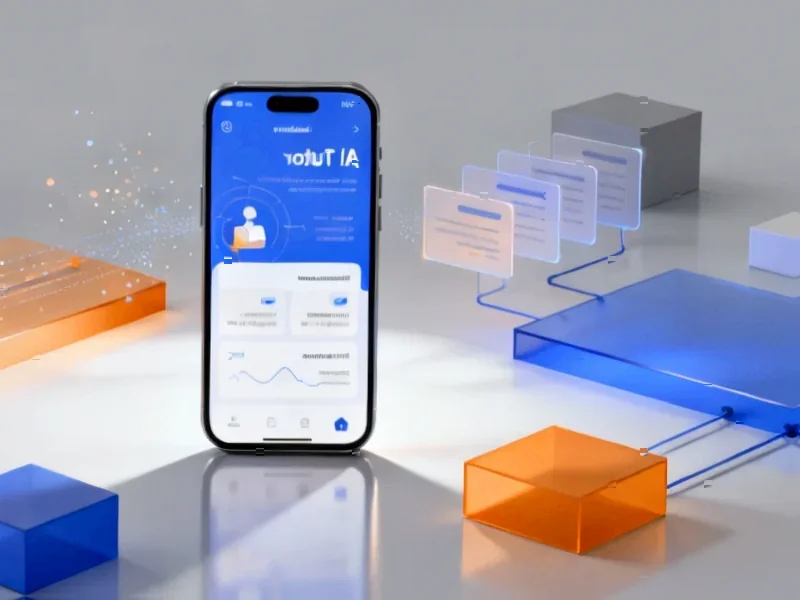

According to Thurrott.com, OpenAI has launched its Sora video generation app on Android in the US, Canada, Japan, Korea, Taiwan, Thailand, and Vietnam, following its iOS debut on September 30. The app uses OpenAI’s second-generation text-to-video model to create realistic videos with sound effects from simple text prompts or images. Users can generate short videos in cinematic, animated, or surreal styles, complete with music and dialogue. Sora also functions as a social platform where people can remix others’ videos, follow creators, and even cast themselves in AI-generated content. The iOS version previously reached 1 million downloads in under 5 days, growing faster than ChatGPT did at its launch. OpenAI is comparing this Sora release to “the GPT-3.5 moment for video” and maintains web access at sora.chatgpt.com, though that still requires an invite code.

The social experiment nobody asked for

Here’s the thing: this feels like OpenAI trying to build a TikTok competitor rather than focusing on what it’s actually good at. The “remix videos” and “follow creators” features scream social media platform, not AI research tool. And honestly, do we really need another place for AI-generated content to flood our feeds? The source even calls it “lots of AI slop,” which feels pretty accurate based on what we’ve seen so far.

Serious quality questions remain

OpenAI comparing this to the GPT-3.5 moment seems… ambitious. Video generation is way more complex than text generation, and we’ve all seen how janky AI video can get. Those “realistic-looking videos” they promise? I’ve seen enough floating hands and melting faces to be skeptical. Plus, the social features basically guarantee a tsunami of low-quality content as people remix each other’s already questionable creations.

What’s the actual strategy here?

Launching in seven markets, including Thailand and Vietnam in Southeast Asia, is interesting: OpenAI is clearly targeting regions where short-form video is already huge. But why keep web access invite-only while mobile gets broader availability? It feels like they’re testing the social features on mobile first while keeping the “serious” users on the web platform. The real question is whether people actually want to create and consume AI video content at scale, or if this is just another flash in the pan.