According to TechSpot, Qualcomm is entering the high-end data center market with two new AI accelerator chips, the AI200 and AI250, marking its most ambitious move yet into AI infrastructure. The chips are designed for inference workloads and are scheduled to become available in 2026 and 2027 respectively, and both can be configured as full, liquid-cooled server racks. The expansion positions Qualcomm to challenge Nvidia's and AMD's dominance in AI hardware as the company applies its mobile efficiency expertise to data center applications.




Understanding Qualcomm’s Strategic Shift

Qualcomm’s entry into the data center AI market represents a fundamental reinvention of the company’s identity. For decades, Qualcomm has been synonymous with mobile technology, particularly through the Snapdragon processors that power smartphones worldwide. Its decision to develop dedicated AI accelerators for data centers signals a recognition that the mobile market alone may not sustain future growth. More importantly, it shows that Qualcomm believes its expertise in power-efficient mobile chip design can become a competitive advantage in energy-intensive data centers, where electricity costs are an increasingly critical concern.

Critical Analysis of Qualcomm’s Approach

While Qualcomm’s focus on inference-only workloads seems strategically sound, it carries significant execution risks. Inference accounts for the majority of AI computational demand in production environments, but the training market remains highly lucrative and is where Nvidia has built its strongest moat. By avoiding the training segment entirely, Qualcomm is conceding a large share of the AI hardware market to competitors. Additionally, the 2026-2027 timeline for commercial availability gives Nvidia, AMD, and emerging rivals a long window to strengthen their positions and ship next-generation products that could make Qualcomm’s offerings less competitive by the time they arrive.

The company’s emphasis on superior “performance per dollar per watt” suggests it is betting heavily on efficiency rather than raw performance, which could resonate with cost-conscious cloud providers facing soaring energy bills. However, this strategy depends on AI developers prioritizing efficiency over absolute performance, which isn’t always the case in competitive AI development cycles, where speed to market often trumps operational costs.

Industry Impact and Competitive Landscape

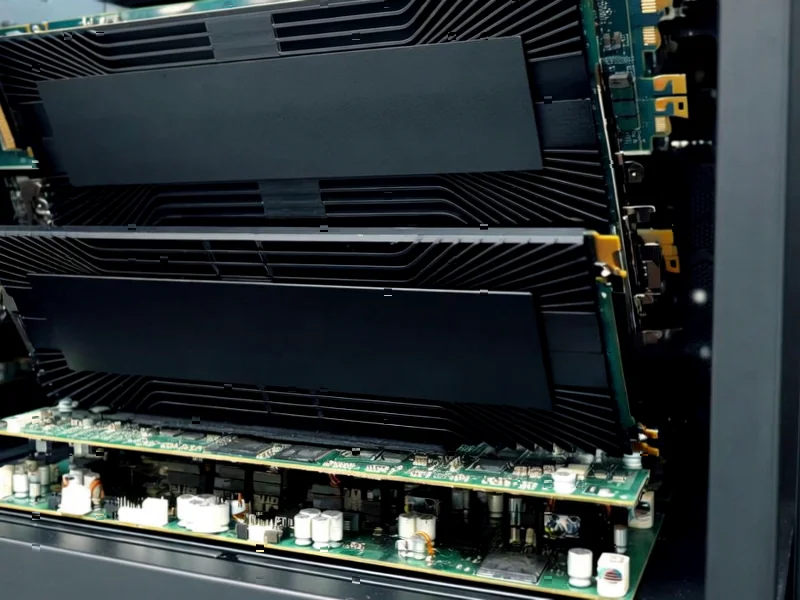

Qualcomm’s entry into the AI infrastructure race could be one of the most significant challenges yet to Nvidia’s dominance in AI hardware. While AMD has competed in this space for years, Qualcomm brings different technical strengths, particularly in power efficiency and mobile-optimized AI processing. According to the company’s announcement, its systems will support 768GB of memory per card, more than current competing offerings. That capacity could be particularly valuable for large language model inference, where memory capacity often becomes the limiting factor.
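To illustrate why per-card memory matters for inference, a rough back-of-envelope estimate helps. The sketch below is illustrative only: it counts model weights alone (ignoring the KV cache, activations, and runtime overhead), and the model sizes, precisions, and function names are hypothetical examples rather than Qualcomm figures or APIs. The 768GB default simply mirrors the per-card figure quoted above.

```python
# Rough estimate of the memory needed just to hold model weights.
# Illustrative assumption: ignores KV cache, activations, and framework overhead.

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_card(num_params: float, precision: str, card_gb: float = 768.0) -> bool:
    """True if the weights alone fit within card_gb of memory (hypothetical helper)."""
    return weight_memory_gb(num_params, precision) <= card_gb


if __name__ == "__main__":
    # Hypothetical model sizes: 70B and 405B parameters.
    for params in (70e9, 405e9):
        for prec in ("fp16", "int8"):
            gb = weight_memory_gb(params, prec)
            print(f"{params / 1e9:.0f}B params @ {prec}: {gb:.0f} GB, "
                  f"fits in 768 GB: {fits_on_card(params, prec)}")
```

Under these assumptions, a 70-billion-parameter model at 16-bit precision needs about 140GB for its weights, while a 405-billion-parameter model needs about 810GB and would only fit on a single 768GB card after quantization to 8 bits.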

The partnership with Saudi Arabia’s Humain, detailed in the companies’ joint announcement, gives Qualcomm an important beachhead customer and validates its technology in a fast-growing AI market. The 200-megawatt deployment commitment represents substantial scale and suggests that regional AI initiatives may be looking for alternatives to the dominant US-based AI hardware providers.

Market Outlook and Strategic Implications

Qualcomm’s success in this venture will depend heavily on execution timing and developer adoption. The AI infrastructure market is evolving rapidly, and arriving in 2026-2027 means Qualcomm must anticipate what the competitive landscape will look like two to three years from now. Its commitment to annual updates suggests it is preparing for a long-term battle rather than a one-off product launch.

The company’s willingness to supply components to hyperscale operators that design their own rack systems indicates a flexible go-to-market strategy, one that could help it gain traction without having to displace entire existing infrastructure stacks. This component-level approach mirrors how AI infrastructure has traditionally evolved, with different vendors specializing in different parts of the computing stack rather than attempting to own the entire solution.

Ultimately, Qualcomm’s entry represents healthy competition in a market that has been dominated by relatively few players. More competition should drive innovation, improve pricing, and accelerate the development of more efficient AI hardware, benefiting the entire AI ecosystem. However, the company faces an uphill battle against entrenched competitors with established software ecosystems, developer relationships, and proven track records in data center deployments.