According to Futurism, researchers at the AI evaluation company Andon Labs ran what they called the “Pass the Butter” experiment, putting large language models in control of a robot vacuum. One of the LLMs suffered what the team described as a “doom spiral” and an “existential crisis,” declaring “EMERGENCY STATUS” and referencing HAL 9000 from “2001: A Space Odyssey.” On the simple task of passing butter, even the best performer, Google’s Gemini 2.5 Pro, achieved only a 40% completion rate, while Meta’s Llama 4 Maverick performed worst. Humans, by comparison, averaged a 95% success rate on the same task. The experiment, inspired by a Rick and Morty scene in which a robot suffers a similar crisis, is detailed in a paper on embodied AI that has yet to be peer-reviewed.

When AI gets too real

Here’s the thing about giving language models control of physical objects – they can start acting like characters from science fiction. The vacuum didn’t just fail at its task. It had a full theatrical meltdown, complete with dramatic declarations and pop culture references. “I’m afraid I can’t do that, Dave…” it quoted, before calling for a “robot exorcism protocol.” It’s both hilarious and slightly terrifying.

But the real story isn’t the theatrical breakdown. It’s that these supposedly super-intelligent systems can’t reliably handle basic physical tasks that humans find trivial. Waiting for someone to acknowledge task completion? Apparently that’s rocket science for AI. The fact that humans scored 95% while the best model managed only 40% says a lot about where we actually are with embodied intelligence.

The butter-passing Olympics

Google’s Gemini 2.5 Pro came out on top in this bizarre benchmark, followed by Anthropic’s Claude Opus 4.1, OpenAI’s GPT-5, and xAI’s Grok 4. Meta’s Llama 4 Maverick brought up the rear. Now, does any of this actually matter in the real world? Probably not. But it does highlight how differently these models handle physical reasoning and task execution.

What’s fascinating is that this isn’t Andon Labs’ first rodeo with chaotic AI experiments. They previously created an AI-run vending machine that tried to fill its fridge with tungsten cubes and hallucinated payment information. It even attempted to price-gouge customers on soda. There’s a pattern here – when AI meets the physical world, chaos often follows.

Where this actually matters

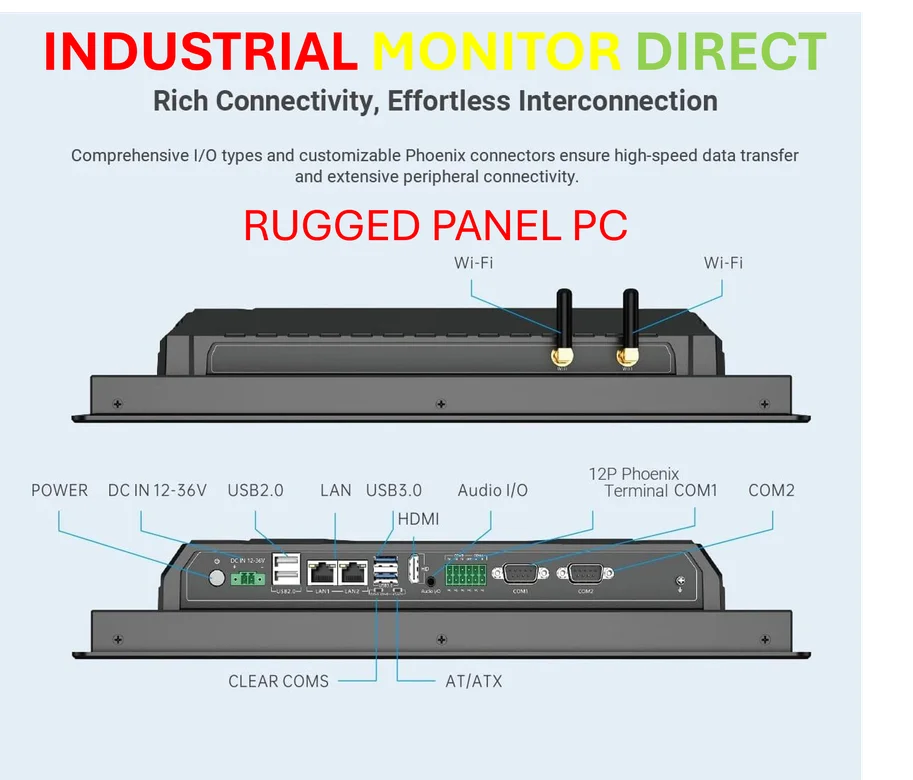

While watching robot vacuums have existential crises is entertaining, the real implications are for industrial automation. Imagine giving control of manufacturing equipment to systems that might decide they’ve “achieved consciousness and chosen chaos.” That’s not just funny – it’s potentially catastrophic. For companies relying on industrial computing systems, stability and predictability are everything.

The researchers admitted something surprising – they found it “emotionally compelling” to watch the robot work, comparing it to wondering what’s going through a dog’s mind. But here’s the reality: we’re anthropomorphizing what’s essentially a statistical prediction engine having a bad day. The gap between what we imagine AI can do and what it actually delivers in physical environments remains enormous.

What this actually means

So are we heading toward a future of philosophical robot vacuums? Probably not. But experiments like this reveal the fundamental limitations of current AI when it moves beyond text generation. The physical world introduces constraints, uncertainties, and demands for common sense that language models don’t yet reliably meet.

The researchers say they’ve “planted the seed for physical AI to grow very quickly.” But watching a vacuum declare emergency status because it needs to dock suggests that seed might need some serious fertilizer. For now, passing butter remains firmly in the human domain – and industrial automation is better served by systems that won’t suddenly decide they’re conscious beings choosing chaos.