According to DCD, Naver is accelerating expansion of its data center in Sejong, South Korea, to meet growing AI demand by developing both the second and third phases simultaneously. The Gak Sejong campus spans 290,000 square meters and is planned to reach 270MW of capacity, eventually hosting 600,000 servers for Naver’s cloud services. Phase two construction will begin by January 2025 with completion in 2028, while phase three will finish in 2029, with a total of six phases eventually planned. The facility features earthquake resistance up to magnitude 7.0 and will implement direct liquid cooling systems to support rack densities of 30-50kW for advanced GPUs. This rapid expansion reflects the intense infrastructure demands of modern AI development.

The AI Infrastructure Arms Race Intensifies

Naver’s decision to build phases two and three in parallel represents a significant strategic shift in response to the explosive growth of artificial intelligence workloads. Most data center operators build sequentially to manage capital expenditure and validate demand before committing to the next phase, so Naver’s compressed timeline suggests it is seeing unusually strong growth in demand for AI services. This mirrors a pattern across global cloud providers, where traditional 3-5 year expansion cycles are collapsing into 12-18 month sprints to capture AI market share. Parallel construction carries substantial financial and operational risk, but it may be necessary for Naver to remain competitive against global hyperscalers.

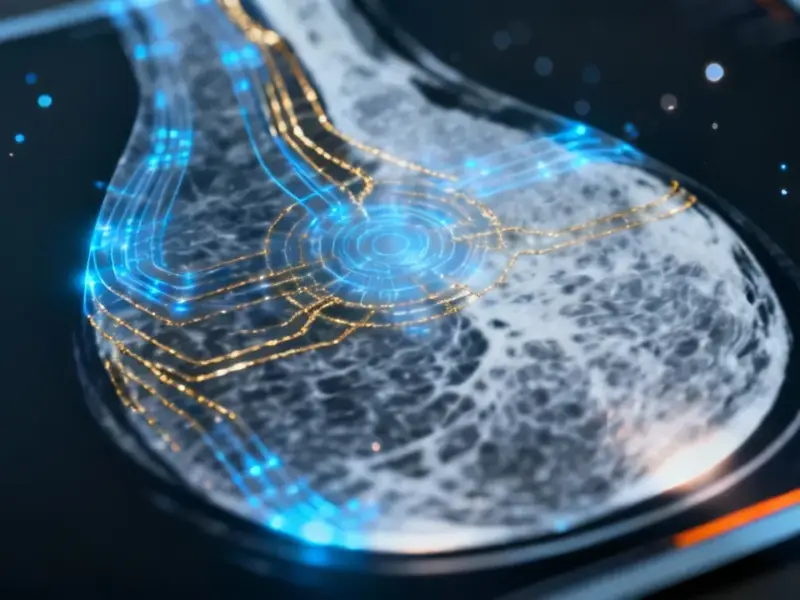

Advanced Cooling as Competitive Advantage

The transition from natural air cooling to direct liquid cooling systems represents a fundamental architectural shift that reflects the power density requirements of modern AI infrastructure. Traditional air-cooled data centers typically support 5-15kW per rack, but Naver’s planned 30-50kW densities align with the thermal demands of high-performance GPU clusters from vendors like Nvidia. The company’s experimentation with immersion cooling suggests they’re preparing for even more demanding future AI hardware. This cooling technology evolution isn’t just about efficiency—it’s becoming a strategic differentiator in AI infrastructure competitiveness, as power and thermal constraints increasingly limit computational density.
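To make the density figures concrete, here is a rough back-of-envelope sketch in Python. It uses only the numbers quoted in this article (270MW, 600,000 servers, 30-50kW and 5-15kW per rack) and makes one simplifying assumption that the source does not confirm: that the full 270MW is available as rack power, with no share set aside for cooling or other facility overhead. The function names are illustrative, and the results are upper bounds rather than Naver's actual plans.

```python
# Back-of-envelope arithmetic using the article's headline figures.
# ASSUMPTION: the full 270 MW feeds racks (no cooling/facility overhead),
# so every rack count below is an upper bound, not a reported number.

CAMPUS_CAPACITY_MW = 270          # planned campus capacity (from the article)
PLANNED_SERVERS = 600_000         # planned server count (from the article)
LIQUID_COOLED_RACK_KW = (30, 50)  # planned rack density range
AIR_COOLED_RACK_KW = (5, 15)      # typical air-cooled range cited above


def racks_supported(capacity_mw: int, rack_kw: int) -> int:
    """Maximum number of racks of a given density the capacity could power."""
    return (capacity_mw * 1000) // rack_kw


def average_watts_per_server(capacity_mw: int, servers: int) -> float:
    """Average power budget per server if capacity were split evenly."""
    return capacity_mw * 1_000_000 / servers


if __name__ == "__main__":
    for label, (low, high) in (
        ("liquid-cooled", LIQUID_COOLED_RACK_KW),
        ("air-cooled", AIR_COOLED_RACK_KW),
    ):
        fewest = racks_supported(CAMPUS_CAPACITY_MW, high)
        most = racks_supported(CAMPUS_CAPACITY_MW, low)
        print(f"{label}: between {fewest} and {most} racks")

    watts = average_watts_per_server(CAMPUS_CAPACITY_MW, PLANNED_SERVERS)
    print(f"average budget per server: {watts:.0f} W")
```

Under that assumption, the same power budget fits into far fewer racks at 30-50kW than at 5-15kW, which is the density argument in a nutshell. The per-server average is a campus-wide figure across all server types, so it says little about the GPU racks specifically.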

Positioning in the Global AI Landscape

Naver’s aggressive South Korea expansion, combined with its planned 500MW campus in Morocco and other international projects, points to a deliberate global strategy. Rather than trying to compete with Western hyperscalers in every region, Naver appears to be pursuing a multi-regional approach that targets specific growth markets. Its selective regional presence, maintaining operations in Germany and Singapore while exiting Hong Kong, suggests careful geopolitical consideration. The company’s partnerships with LG AI Research, SK Telecom, and others offer an alternative to the go-it-alone strategies of larger competitors, and could spread the heavy capital costs of the AI infrastructure race across several parties.

Sejong’s Strategic Importance

The choice of Sejong as the location for this flagship AI data center reflects broader regional development strategies within South Korea. As the country’s administrative capital, Sejong offers political stability, infrastructure investment, and potential regulatory advantages. The earthquake-resistant design to magnitude 7.0 standards addresses regional seismic risks while ensuring business continuity for critical AI services. This location decision appears calculated to balance geographic risk mitigation with access to South Korea’s robust technology ecosystem and talent pool, creating a strategic AI hub that serves both domestic and international markets.

Efficiency Versus Scale in AI Infrastructure

Lee Sang-jun’s comment about pursuing “an efficient approach” rather than massive investments highlights Naver’s strategic positioning against deep-pocketed competitors. While companies like Google, Microsoft, and Amazon can afford to build enormous AI capacity ahead of demand, Naver appears to be targeting capital efficiency and operational excellence as competitive advantages. This approach carries both opportunities and risks: it may deliver better returns on investment, but it could leave Naver capacity-constrained during periods of rapid AI adoption. The success of this strategy will depend on the company’s ability to forecast demand accurately while keeping the flexibility to scale quickly when market opportunities emerge.